A question that has haunted humans since the dawn of…well probably about 2014.

What the heck is Expected Goals (xG) and why has my life been invaded by this nonsense?

Here is how Wyscout defines xG in their publicly available glossary of terms:

Expected goals (xG) is a predictive ML model used to assess the likelihood of scoring for every shot made in the game.

For every shot, the xG model calculates the probability to score based on event parameters:

Location of the shot

Location of the assist

Foot or head

Assist type

Was there a dribble of a field player or a goalkeeper immediately before the shot?

Is it coming from a set piece?

Was the shot a counterattack or did it happen in a transition?

Tagger's assessment of the danger of the shot

These parameters (plus a few technical ones) are used to train the xG model on the historical Wyscout data and predict the probability of the shot being scored.

The probabilities range between 0 and 1. A shot of 0.1 xG means a shot like this should be scored 10% of the time. A shot of 0.8 xG means a shot like this should be scored 80% of the time. A penalty xG value is fixed to 0.76.
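To make that interpretation concrete, here is a minimal Python sketch. It is not Wyscout's model; it only restates the arithmetic in the glossary. The function name and the 100-attempt sample size are assumptions chosen for illustration.

```python
def expected_goals_from_similar_shots(xg: float, shots: int) -> float:
    """Goals expected if `shots` attempts each carry scoring probability `xg`."""
    if not 0.0 <= xg <= 1.0:
        raise ValueError("xG is a probability and must lie between 0 and 1")
    return xg * shots


# xG values quoted in the glossary; the 100 attempts are hypothetical.
for label, xg in [("0.1 xG shot", 0.1), ("0.8 xG shot", 0.8), ("penalty", 0.76)]:
    goals = expected_goals_from_similar_shots(xg, 100)
    print(f"{label}: about {goals:.0f} goals per 100 attempts")
```

The point is that a single xG value says little about one shot, but it describes what should happen over many shots of the same kind.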

As Alan and I are fond of repeating on the podcast, all models are bad but some are more useful than others. xG data you encounter, whether it is via this site, FotMob, or any other public venue, is simply a model attempting to quantify the probabilities of similar shots becoming goals.

Here is a map of Kyogo Furuhashi’s most recent 75 shots:

People often refer to xG as “chance quality” and use the two terms interchangeably. A vital aspect of every model, whether for xG or anything else for that matter, is the set of underlying assumptions and parameters being used.

With Wyscout, for example, their original model did not include any characterization of the nature of the pass which immediately preceded a shot. Subsequent model enhancements have added that dimension. Just that simple conceptual change was material, as an aerial crossed header that lands about 10 yards central to goal is not the same as a through ball on the ground to the same area - though obviously both can result in shots.

Let us review a sequence of shots from the 3-0 victory at St. Mirren on August 25, 2024, as an example to better flesh out these issues.

Now, there was a lot going on here, which is a testament to why it takes Alan Morrison around six hours FOR EVERY GAME to manually collect his data.

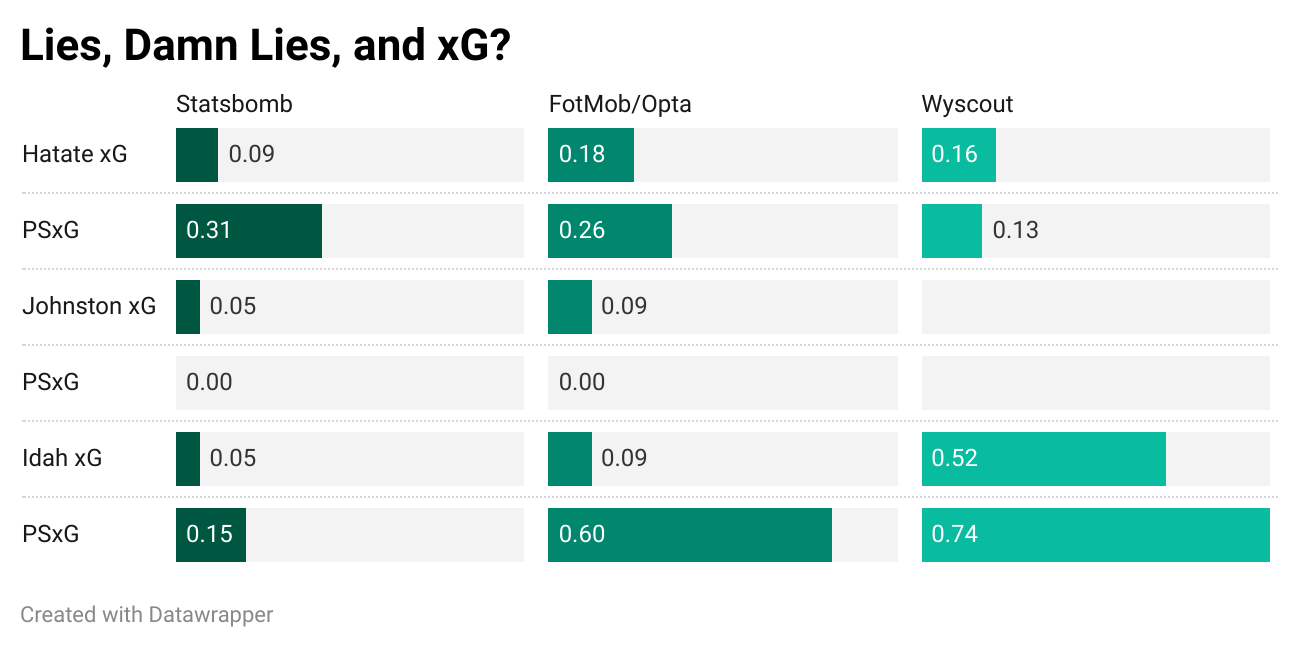

Just for clarity, the sequence of shots was Reo Hatate, followed by a swooping attempt by Alistair Johnston, then another by Adam Idah. Here were the xG and post-shot xG from three different models for the three “chances.”

As one can see, there is quite a large variation across the models. Heck, Wyscout did not even characterize Johnston’s as a “shot,” which is why that section is blank!

So why the differences? Each model has different assumptions - for example, clubs pay much dinero to Statsbomb to get access to their data and models, as it is widely considered to include the most variables.

For example, their xG and PSxG models incorporate the positioning of defenders, keepers, the height of the ball when the shot was taken, and now even a qualitative characterization of shot velocity.

Go back to the video above and pause around the 9th second when Idah takes his shot and you will see the difference these variables can make. He had a defender squared up to him challenging and in a position to block, is at a relatively narrow angle, and the keeper is well positioned and settled with a relatively clear line of sight to the shot.

The PSxG models from FotMob and Wyscout do not include those contextual variables, and hence the MAJOR disparities.

Do these issues make the FotMob and Wyscout data useless? Ah, dear reader, this is where the domain of analytics enters the equation. “Stats” in a vacuum are indeed vulnerable to being poorly applied or even weaponized.

Another idea Alan and I have shared regularly on the podcast is the relatively low value in single-game data relative to extrapolating predictions for the future. Some of that has to do with the awareness of issues with sample size, but another aspect is a healthy respect for the data, related statistics, and associated model limitations.

Personally, I believe Statsbomb has made enough of a leap forward that many of the single-game issues have been materially reduced from a quality perspective. Another leap forward is likely to occur when balls are fitted with tracking chips and optical cameras track their movement/spin/velocity, along with every single player at all times (not just within the purview of TV cameras).

In summary, xG is simply one of many statistical metrics which can be used to try and quantify the quality of chances in football. Across the board, the numbers are not a perfect quantitative reflection of reality. But within a robust analytical process, they can be useful across a multitude of associated domains - recruitment, opposition analysis, etc.

Thanks for this.

Is context and game state not important here too?

In the St Mirren example for instance, Celtic get the combined xG for those chances, but you could argue, given they all happen in the same short sequence, we were only ever going to score one goal there, so the xG from missing all of the shots is an inflated representation of our probability of scoring said goal.
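That point can be put into numbers. Below is a minimal Python sketch, assuming each later shot in the sequence only happens because the earlier ones were missed, so at most one goal can come out of it. The three xG values are hypothetical placeholders, not the figures from any of the models discussed in the article, and `sequence_goal_probability` is a name made up for illustration.

```python
import math


def sequence_goal_probability(xg_values):
    """Chance that at least one shot in a single sequence is scored.

    The sequence yields no goal only if every shot misses, so the
    answer is 1 minus the product of the individual miss chances.
    """
    return 1.0 - math.prod(1.0 - xg for xg in xg_values)


# Hypothetical xG values for three shots in one passage of play.
sequence = [0.30, 0.10, 0.25]

summed = sum(sequence)                          # what a simple xG total credits
combined = sequence_goal_probability(sequence)  # chance the sequence yields a goal

print(f"Summed xG for the sequence:    {summed:.2f}")
print(f"Chance of scoring from it:     {combined:.2f}")
```

With these placeholder values the summed figure is higher than the chance of the sequence producing a goal, which is the inflation described above. The gap grows as the individual shots get better.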

Tks. Good article

Is total xG at the end of the game just the total of individual xGs?

E.g if Celtic had an xG at the end of a game of 1.5 that could be ten shots with an xG of 0.15 or 2 shots with an xG of 0.8 and 0.7

If that's the case should xG at the end be divided by no of shots?

Tks again
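On the question above: a match xG total is conventionally the sum of the individual shot values, so the two hypothetical games in the comment share a total of 1.5 while looking very different shot by shot. Here is a rough Python sketch of that difference, assuming the shots are independent of one another (a simplification). The function name `goal_distribution` is made up for illustration.

```python
def goal_distribution(xg_values):
    """Return [P(0 goals), P(1 goal), ...], treating shots as independent."""
    dist = [1.0]  # before any shot, 0 goals is certain
    for p in xg_values:
        nxt = [0.0] * (len(dist) + 1)
        for goals, prob in enumerate(dist):
            nxt[goals] += prob * (1 - p)   # this shot is missed
            nxt[goals + 1] += prob * p     # this shot is scored
        dist = nxt
    return dist


# The two shot profiles from the comment; both total 1.5 xG.
profiles = {
    "ten shots at 0.15": [0.15] * 10,
    "shots of 0.8 and 0.7": [0.8, 0.7],
}

for name, shots in profiles.items():
    dist = goal_distribution(shots)
    total = sum(shots)
    print(
        f"{name}: total xG {total:.2f}, "
        f"xG per shot {total / len(shots):.2f}, "
        f"P(no goals) {dist[0]:.2f}"
    )
```

Dividing the total by the number of shots gives xG per shot, a measure of average chance quality. It complements the total rather than replacing it, because the total still reflects how many chances were created.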